Why Scaling Data Teams Can Quickly Drain Your Budget (and How to Prevent It)

Scaling your data team should be an exciting phase, signaling growth and increased data maturity. However, it’s also a critical juncture where many companies unintentionally fall into common and costly traps:

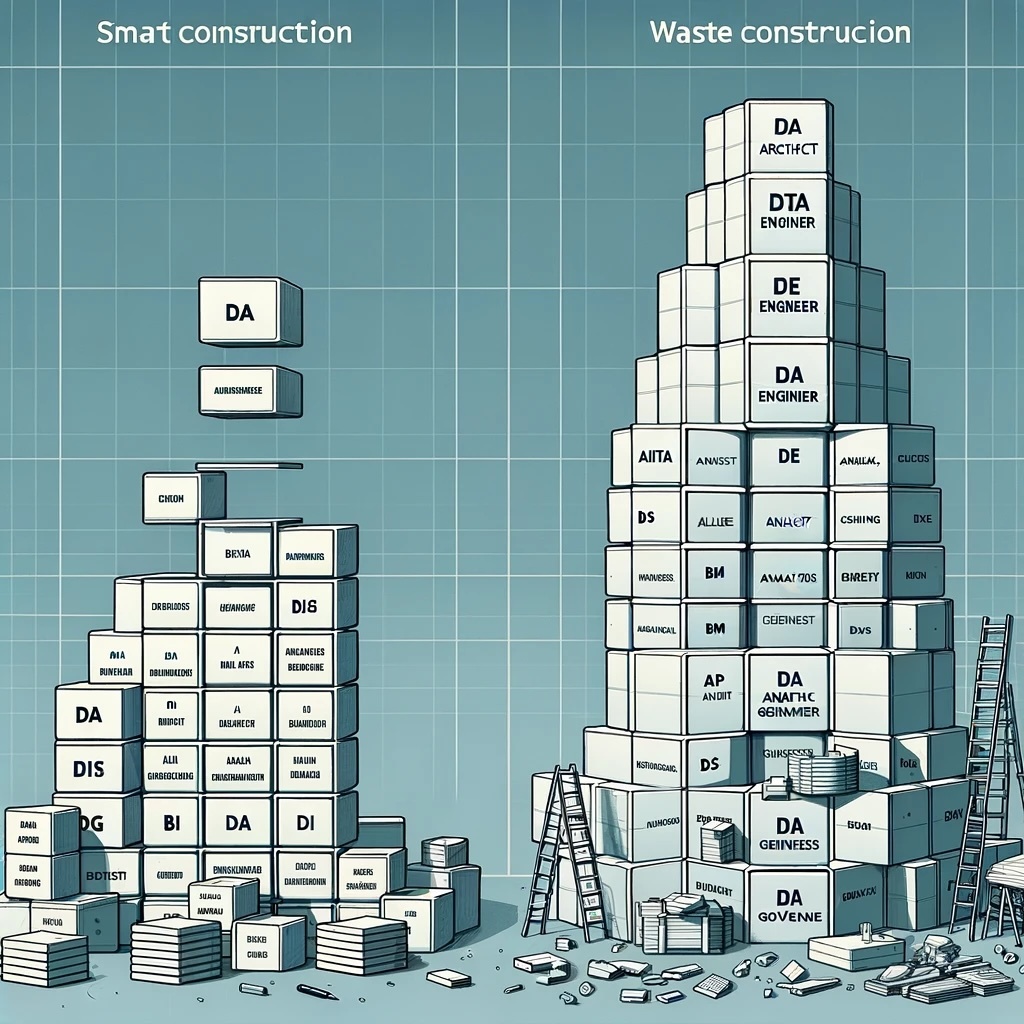

- Premature Specialization = Budget Bloat: Hiring highly specialized roles (think AI wizards and ML gurus) too early, before establishing foundational data infrastructure and clear business use cases. This leads to a top-heavy team and inflated payrolls without immediate ROI.

- Analytics Before Architecture = Wasted Investment: Investing heavily in advanced AI and analytics initiatives before setting up clean, reliable, and structured data pipelines. It’s like buying a sports car before the roads are built: there is nowhere to drive it.

- Outsourcing Reliance Without a Strategy = Long-Term Cost Leakage: Over-relying on external vendors and consultants without a clear long-term strategy for internal capability building and cost efficiency. Short-term fixes can become expensive long-term dependencies.

The key to scaling successfully and cost-effectively? Adopting a phased, budget-conscious approach. This means strategically balancing in-house hiring, targeted outsourcing, and smart technology investments – all while rigorously avoiding unnecessary overspending. Let’s dive into the tactics.

Hiring vs. Outsourcing: Finding Your Optimal Balance

One of the most crucial decisions when scaling your data team is determining the right mix of in-house hires versus outsourcing. There’s no one-size-fits-all answer, but here’s a framework to guide your choices:

When to Prioritize In-House Hiring:

- Long-Term Data Capability Building: When you view data as a core, strategic asset and aim to build lasting in-house expertise and institutional knowledge.

- Deep Business Context is Essential: When roles require a profound understanding of your specific business domain, internal data landscape, and unique organizational nuances (e.g., roles focused on internal data governance, deeply embedded business intelligence).

- Building Proprietary Data Products & Core AI Models: When you are developing unique, company-specific data-powered products or core AI algorithms that form a competitive advantage and require ongoing internal ownership and iteration.

Best In-House Roles to Start With:

- Data Architect: To design and maintain a scalable, robust, and future-proof data infrastructure. This role is foundational for long-term data success.

- Data Engineer: To build and manage your essential ETL/ELT pipelines, ensuring consistent data flow and quality. This role is the data plumbing of your organization.

- Business Intelligence Analyst: To directly support internal business teams with reporting, dashboards, and actionable insights, bridging the gap between data and business users.

When Strategic Outsourcing Makes Sense:

- Specialized & Niche Expertise for Specific Projects: When you require highly specialized skills (e.g., cutting-edge NLP, computer vision) for short-term, project-based needs, rather than ongoing, broad application.

- Speed & Agility Are Top Priorities (e.g., Proof of Concepts): When you need to rapidly launch a proof-of-concept, pilot project, or quickly augment your team to meet urgent deadlines.

- Limited Immediate Access to Internal Talent: When you face immediate skill gaps internally and outsourcing provides a faster route to access specialized expertise compared to lengthy recruitment and onboarding.

Best Roles & Functions to Consider Outsourcing (Initially):

- Machine Learning Engineers (for Advanced AI Solutions): For developing and deploying complex AI/ML models, especially in the early stages of AI adoption when full-time expertise may not yet be justified.

- Data Scientists (for Experimental Modeling & Research): For exploratory data analysis, advanced statistical modeling, and R&D-focused data science initiatives, particularly for projects with uncertain or evolving requirements.

- AI Consultants (for Strategy, Implementation, and Audits): For initial AI strategy development, roadmap creation, implementation guidance, and independent audits of your data and AI initiatives.

Key Takeaway: Adopt a hybrid approach. Begin by strategically hiring core, foundational roles in-house for long-term data capability. Simultaneously, leverage targeted outsourcing for specialized, project-based needs and niche expertise until internal demand and ROI clearly justify full-time hires in those areas. Start core, scale strategically.
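
The framework above can be sketched as a simple checklist in code. This is a minimal illustration, not a definitive scoring model: the `RoleNeed` fields, the `recommend_sourcing` name, and the equal weighting of each criterion are all assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass
class RoleNeed:
    """Hypothetical description of a staffing need (all fields are illustrative)."""
    name: str
    # Criteria that favor in-house hiring
    long_term_capability: bool = False        # lasting expertise you want to own
    needs_deep_business_context: bool = False
    builds_proprietary_product: bool = False
    # Criteria that favor outsourcing
    short_term_niche_project: bool = False    # e.g. a one-off NLP or vision project
    speed_is_top_priority: bool = False       # e.g. a proof of concept
    no_internal_talent_available: bool = False


def recommend_sourcing(need: RoleNeed) -> str:
    """Count which side has more criteria met; ties are flagged for discussion."""
    in_house = sum([need.long_term_capability,
                    need.needs_deep_business_context,
                    need.builds_proprietary_product])
    outsource = sum([need.short_term_niche_project,
                     need.speed_is_top_priority,
                     need.no_internal_talent_available])
    if in_house > outsource:
        return "in-house"
    if outsource > in_house:
        return "outsource"
    return "review"  # no clear signal: decide case by case


# Two example needs with assumed characteristics
data_engineer = RoleNeed("Data Engineer",
                         long_term_capability=True,
                         needs_deep_business_context=True)
nlp_pilot = RoleNeed("NLP specialist for a pilot",
                     short_term_niche_project=True,
                     speed_is_top_priority=True,
                     no_internal_talent_available=True)

print(data_engineer.name, "->", recommend_sourcing(data_engineer))
print(nlp_pilot.name, "->", recommend_sourcing(nlp_pilot))
```

In practice you would likely weight the criteria differently for your organization; the point is only that the decision can be made explicit and repeatable.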

Cost Efficiency Tactics: Practical Strategies for Smart Scaling

Beyond the hire vs. outsource decision, here are three proven cost-saving strategies to scale your data team efficiently and avoid budget overruns:

- Strategically Leverage Cross-Functional Roles in Early Stages:

- The Tactic: Instead of immediately hiring siloed specialists for every data function, prioritize hiring versatile, cross-functional data professionals who can capably handle multiple related tasks and responsibilities, especially in the initial phases of team building.

- Practical Examples:

- In early-stage data teams, prioritize Data Engineers who possess solid analytical skills and can also contribute to basic data analysis and dashboard creation, before adding dedicated BI Developers.

- A well-rounded Data Architect, particularly in smaller organizations, can often initially oversee data governance responsibilities, postponing the immediate need for a separate, full-time Data Governance Lead.

- Cost Savings Realized: By strategically leveraging cross-functional skills, you can significantly reduce upfront hiring costs. Instead of immediately needing 5-7 specialized roles, you may realistically require only 2-3 carefully selected, versatile professionals in your initial scaling phase.

- Implement Phased Team Scaling Directly Tied to Business Needs & Data Maturity:

- The Tactic: Avoid premature, large-scale hiring. Instead, meticulously phase in your data team’s growth in direct alignment with evolving business demands and your organization’s increasing data maturity. Don’t build for tomorrow’s hypothetical needs today.

- Recommended Phased Hiring Approach:

- Cost Savings Realized: This phased approach prevents overspending on specialized, high-cost roles before your business has validated clear use cases and developed the foundational data infrastructure to support their effective contribution. Avoid building an advanced AI lab when you haven’t even laid the data groundwork.

- Proactively Avoid Redundant Role Hiring:

- The Tactic: Carefully analyze role responsibilities to prevent unintentional overlaps in functionality and avoid hiring redundant roles that perform essentially the same tasks under different titles. Streamline roles and responsibilities for maximum efficiency.

- Common Redundancy and Sequencing Pitfalls to Avoid:

- Hiring Data Engineers and Data Scientists Simultaneously (Too Early): Prioritize Data Engineers first. Ensure your data is robustly collected, cleaned, and properly structured before investing in Data Scientists whose effectiveness heavily relies on high-quality data.

- Adding Machine Learning Engineers Before AI-Ready Data Infrastructure: First, focus on building a mature BI and data analytics capability. Then, strategically transition to specialized AI/ML roles when your data infrastructure and validated use cases justify the investment in advanced AI.

- Key Takeaway: Hire strategically based on demonstrated business need and sequential data maturity, not based on industry trends or perceived prestige of certain data science roles. Prioritize essential roles first, and meticulously avoid functional redundancy.
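
The sequencing rules above (Data Engineers before Data Scientists, a mature BI capability before Machine Learning Engineers) can be expressed as a simple gate. This is a sketch under stated assumptions: the three maturity flags and the `roles_justified` function are hypothetical names chosen for illustration, and the foundational role list is taken from the in-house roles named earlier in this post.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class DataMaturity:
    """Hypothetical maturity flags; how you assess each one is up to you."""
    reliable_pipelines: bool      # data is collected, cleaned, and structured
    mature_bi: bool               # reporting and analytics are in routine use
    validated_ai_use_case: bool   # a business case for AI has been validated


def roles_justified(maturity: DataMaturity) -> List[str]:
    """Return the roles that the current maturity level supports hiring."""
    # Foundational roles come first, regardless of maturity.
    roles = ["Data Architect", "Data Engineer", "Business Intelligence Analyst"]

    # Data Scientists depend on high-quality data being available.
    if maturity.reliable_pipelines:
        roles.append("Data Scientist")

    # ML Engineers come after BI is mature and a use case is validated.
    if (maturity.reliable_pipelines and maturity.mature_bi
            and maturity.validated_ai_use_case):
        roles.append("Machine Learning Engineer")

    return roles


early_stage = DataMaturity(reliable_pipelines=False, mature_bi=False,
                           validated_ai_use_case=False)
print(roles_justified(early_stage))
```

A gate like this is deliberately conservative: a role is added only once the conditions it depends on are in place.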

Using Technology to Radically Optimize Costs

Beyond strategic hiring and outsourcing, smart technology investments can be a game-changer in achieving a truly cost-efficient data team operation. Here are three key technological levers to pull:

- Implement Automation for ETL/ELT & Core Data Processing:

- The Tactic: Aggressively automate data pipeline development, data transformation, and routine data processing tasks using modern automation tools. Reduce manual coding and repetitive engineering work wherever possible.

- Technology Examples:

- For Automated Data Ingestion & Transformation: Fivetran (managed ingestion), AWS Glue, dbt (data build tool).

- For Workflow Automation & Orchestration: Apache Airflow, Prefect.

- Cost Savings Potential: Strategic automation of ETL and data processing may reduce the need for additional Data Engineers by an estimated 20-30%, freeing up valuable engineering time for more complex, high-impact tasks. Treat this range as a planning estimate rather than a guarantee.

- Transition to Cloud-Based Data Infrastructure:

- The Tactic: Migrate away from expensive on-premise infrastructure and fully embrace scalable, cost-optimized cloud platforms for data storage, processing, and analytics. Leverage the inherent pay-as-you-go model of the cloud.

- Cloud Platform Examples:

- Scalable Data Warehousing: Amazon Redshift, Google BigQuery, Snowflake.

- Cost-Effective AI & Data Processing: Databricks, Amazon SageMaker, Azure Machine Learning.

- Cost Savings Potential: Migrating to cloud-based solutions eliminates massive upfront infrastructure capital expenditures. You transition to a flexible, consumption-based pricing model, paying only for the data storage and computing power actually utilized, resulting in significant long-term infrastructure cost savings and scalability.

- Strategically Leverage AI for Reporting & Business Analytics:

- The Tactic: Explore and implement AI-powered tools to automate aspects of routine reporting, basic data analysis, and initial insight generation. Empower business users with self-service capabilities, reducing reliance on large BI teams for standard requests.

- Technology Examples:

- Self-Service BI & Dashboarding: Looker, Power BI, Tableau.

- AI-Driven Analytics & Automated Insights: Google AutoML, Azure Machine Learning automated ML, Tableau Ask Data/Explain Data features.

- Cost Savings Potential: Implementing self-service dashboards and incorporating AI-assisted analytics may reduce overall reporting overhead and decrease the need for large BI teams by an estimated 30-40% over time, freeing up analyst time for more strategic, in-depth investigations. Treat this range as a planning estimate rather than a guarantee.

Key Takeaway: Smart technology investments, especially in automation and cloud migration, are not just about modernization; they are powerful levers for fundamentally reducing operational costs, optimizing team size, and maximizing the cost-efficiency of your data function. Technology enables lean and agile data teams.
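
To see what the estimated ranges above could mean for a budget, here is a small worked calculation. It is a sketch only: the planned headcount of 10 and the annual cost per hire are hypothetical placeholders, and the percentages are the estimates quoted in this section, not measured results.

```python
from typing import Tuple


def avoided_hires(planned_hires: int, low_pct: float,
                  high_pct: float) -> Tuple[float, float]:
    """Return the (low, high) number of planned hires a reduction range avoids."""
    if not 0 <= low_pct <= high_pct <= 100:
        raise ValueError("percentages must satisfy 0 <= low <= high <= 100")
    return planned_hires * low_pct / 100, planned_hires * high_pct / 100


def avoided_cost(planned_hires: int, low_pct: float, high_pct: float,
                 annual_cost_per_hire: float) -> Tuple[float, float]:
    """Translate avoided hires into an annual cost range."""
    low, high = avoided_hires(planned_hires, low_pct, high_pct)
    return low * annual_cost_per_hire, high * annual_cost_per_hire


# Hypothetical inputs: replace with your own plan and salary data.
PLANNED_ENGINEERS = 10
ASSUMED_ANNUAL_COST = 120_000  # placeholder figure, not a benchmark

# 20-30% is the ETL automation estimate quoted above.
low_hires, high_hires = avoided_hires(PLANNED_ENGINEERS, 20, 30)
low_cost, high_cost = avoided_cost(PLANNED_ENGINEERS, 20, 30,
                                   ASSUMED_ANNUAL_COST)

print(f"Hires avoided: {low_hires:.0f} to {high_hires:.0f}")
print(f"Annual cost avoided: {low_cost:,.0f} to {high_cost:,.0f}")
```

The same function can be reused with the 30-40% reporting estimate for a BI team. Remember to subtract the cost of the tools themselves before treating the result as a net saving.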

Detailed Cost Breakdown: Planning Your Data Team Budget Effectively

Want a more granular, detailed breakdown of typical data team role salaries, outsourcing costs, technology platform pricing, and a customized cost planning template to budget effectively for your data team? Reach out to our consulting team for a personalized cost analysis!

Final Thoughts: Scaling for Impact, Not Just Size

The ultimate goal is not to make your data team as large as possible, but to scale it smartly and efficiently to maximize business impact while remaining rigorously cost-conscious. Remember these core principles for efficient scaling:

- Start Lean, Scale Judiciously: Begin with only the most essential roles in your early phases of data team development.

- Outsource Strategically First: Outsource specialized skills and niche expertise first, and commit to full-time hires in those domains only once internal demand and ROI justify them.

- Automate Relentlessly: Aggressively automate routine data processes and workflows to demonstrably reduce staffing needs over time.

- Cloud-First Infrastructure: Embrace cloud-based solutions as your default infrastructure to significantly lower upfront and ongoing infrastructure expenditures.

By adhering to these principles, you can build a powerful, high-impact data team that scales sustainably and cost-effectively, driving significant business value without breaking the bank.

Cost-effective scaling hinges on strategic growth. For the full blueprint to building effective data teams, be sure to revisit our earlier posts on laying your team’s foundation and aligning with business goals. Let’s build your data success, step by step.